The next step in SAP BW, after the Initial (INIT) and Full extraction of Logistics (LIS) data from SAP R/3 into the Persistent Staging Area (PSA) via an InfoPackage, is to load a very large DataSource (2LIS_11_VAKON) from the PSA into a DataStore Object (DSO). This DataSource is one of the biggest we have on our SAP BW 7 system.

Seconds after we started the load from the PSA to the DSO with a Data Transfer Process (DTP), it suddenly threw an ABAP dump on the first package.

This is the log from the ST22 ABAP dump analysis:

```
----------------------------------------------------------------------------------------------------
Runtime Errors         UNCAUGHT_EXCEPTION
Exception              CX_SY_NO_HANDLER
----------------------------------------------------------------------------------------------------
What happened?
    The exception 'CX_SY_NO_HANDLER' was raised, but it was not caught anywhere
    along the call hierarchy.
    Since exceptions represent error situations and this error was not
    adequately responded to, the running ABAP program
    'CL_RSBK_CMD_X=================CP' has to be terminated.
----------------------------------------------------------------------------------------------------
Error analysis
    The exception, which is assigned to class 'CX_SY_NO_HANDLER', was not caught
    and therefore caused a runtime error.
    The reason for the exception is:
    An exception with the type CX_SY_OPEN_SQL_DB occurred, but was neither
    handled locally, nor declared in a RAISING clause.
    The occurrence of the exception is closely related to the occurrence of a
    previous exception "CX_SY_OPEN_SQL_DB", which was raised in the program
    "CL_RSODSO_SEMANTIC_PACKETIZER=CP", specifically in line 79 of the
    (include) program "CL_RSODSO_SEMANTIC_PACKETIZER=CM002".
----------------------------------------------------------------------------------------------------
How to correct the error
    If you have access to SAP Notes, carry out a search with the following
    keywords:
    "UNCAUGHT_EXCEPTION" "CX_SY_NO_HANDLER"
    "CL_RSBK_CMD_X=================CP" or "CL_RSBK_CMD_X=================CM00S"
    "IF_RSBK_CMD_X~GET_DATAPACKAGE_GENERAL"
```

Problem Analysis:

After digging through search engines and SAP Notes, it turned out that the root cause of this dump is a lack of temporary storage, specifically in PSAPTEMP. PSAPTEMP is the Oracle temporary tablespace used by SAP BW, roughly comparable to tempdb (where temporary tables live) on Microsoft SQL Server.

You can use transaction ST14 to run a Business Warehouse application analysis and find the largest tables, which lets you determine a suitable (minimum) size for PSAPTEMP. Even though the exact size required is hard to pin down, this at least gives you the minimum storage size.
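The sizing rule above (PSAPTEMP should be at least as large as the largest table found by ST14) can be sketched as follows. This is only an illustration of that rule of thumb: the table names and sizes are hypothetical placeholders, not values from a real ST14 run, and the helper names are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class TableSize:
    name: str       # table name as listed in the ST14 analysis (hypothetical here)
    size_mb: float  # allocated size of the table in MB


def min_psaptemp_mb(tables: list[TableSize]) -> float:
    """Lower bound for PSAPTEMP: the size of the largest table."""
    if not tables:
        raise ValueError("no tables given")
    return max(t.size_mb for t in tables)


def shortfall_mb(tables: list[TableSize], current_psaptemp_mb: float) -> float:
    """How much PSAPTEMP would need to grow to reach the lower bound (0 if it already does)."""
    return max(0.0, min_psaptemp_mb(tables) - current_psaptemp_mb)


if __name__ == "__main__":
    # Hypothetical figures, NOT taken from a real system.
    tables = [
        TableSize("PSA_TABLE_A", 42_000.0),
        TableSize("DSO_ACTIVE_B", 18_500.0),
        TableSize("FACT_TABLE_C", 9_750.0),
    ]
    print(min_psaptemp_mb(tables))         # 42000.0
    print(shortfall_mb(tables, 30_000.0))  # 12000.0
```

As the paragraph says, this only yields a minimum; the real requirement can be higher when several large sorts run at the same time.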

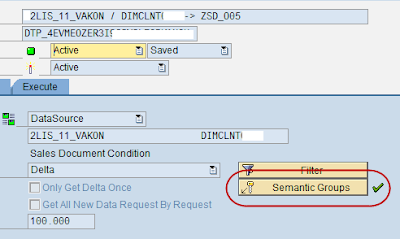

The significant amount of temporary storage is consumed by the 'Semantic Groups' feature. With semantic groups enabled, the DTP first gathers all records and sorts them by the primary key or the selected group key fields, across all packages, so that records sharing the same key end up in the same package.
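The behaviour described above can be modelled in a few lines. This is a simplified in-memory sketch, not SAP's implementation of `CL_RSODSO_SEMANTIC_PACKETIZER`: the record layout, the `doc` key field and the function name are assumptions for illustration. It shows the two properties that matter here: every record is sorted by the semantic key before any package is built, and a group is never split across packages.

```python
from itertools import groupby
from operator import itemgetter


def semantic_packages(records, key_fields, package_size):
    """Split records into packages without ever splitting a semantic group.

    All records are sorted by the semantic key first. In the database, this
    global sort is the step that needs temporary space (PSAPTEMP on Oracle).
    """
    key = itemgetter(*key_fields)
    ordered = sorted(records, key=key)  # sorts ALL records, not one package at a time
    packages, current = [], []
    for _, group in groupby(ordered, key=key):
        group = list(group)
        # Close the current package if the whole group would not fit into it.
        if current and len(current) + len(group) > package_size:
            packages.append(current)
            current = []
        # A group is kept together even if it alone exceeds package_size.
        current.extend(group)
    if current:
        packages.append(current)
    return packages


if __name__ == "__main__":
    # Hypothetical sales-document items; "doc" plays the role of the group key.
    records = [
        {"doc": "B", "item": 1}, {"doc": "A", "item": 1}, {"doc": "B", "item": 2},
        {"doc": "C", "item": 1}, {"doc": "A", "item": 2}, {"doc": "B", "item": 3},
    ]
    for i, pkg in enumerate(semantic_packages(records, ("doc",), package_size=3), 1):
        print(i, [(r["doc"], r["item"]) for r in pkg])
    # 1 [('A', 1), ('A', 2)]
    # 2 [('B', 1), ('B', 2), ('B', 3)]
    # 3 [('C', 1)]
```

In this model, lowering `package_size` only changes how the sorted records are cut into packages; the `sorted()` call over the complete data set still runs, which is consistent with the observation that a smaller DTP package size does not avoid the PSAPTEMP shortage.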

Possible solutions and workarounds:

1. Extend PSAPTEMP.

2. Turn off the 'Semantic Groups' feature in the DTP. This also solves the problem, without extending PSAPTEMP.

The consequence of turning off 'Semantic Groups' is that records with the same key (primary key) or group key are no longer guaranteed to land in the same package, so you might end up fixing a lot of incorrect data.

Alternatively, you can reduce the package size in the DTP (in the screenshot it is 100,000; normally it should be 50,000).

I've already tried that, and it didn't work either, simply because semantic grouping sorts all records across packages regardless of the package size.